Waifu2x-Caffe's GUI and CUI

Waifu2x-Caffe supports both a graphical user interface (GUI) and a character user interface (CUI). Nagadomi, the developer of Waifu2x, wrote a post that maps the various features of the Waifu2x demo site to Waifu2x-Caffe's GUI, and I recommend that anyone familiar with the demo site check it out here. More detailed installation instructions and general information about using Waifu2x-Caffe can be found on Waifu2x-Caffe's GitHub repository page.

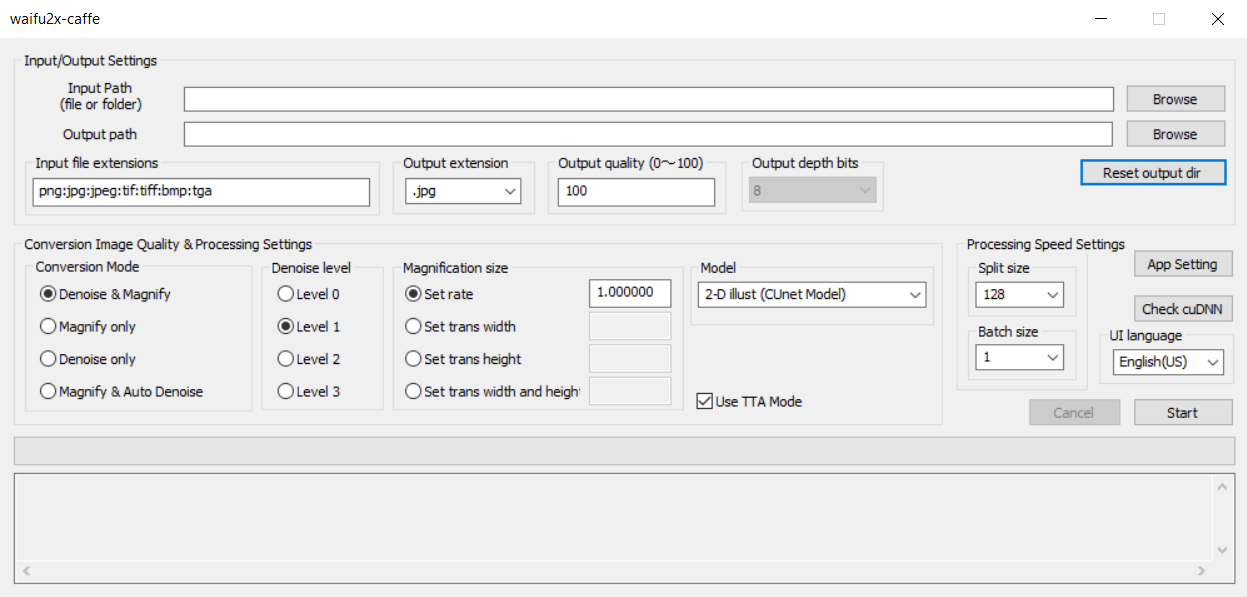

Waifu2x-Caffe's GUI Overview

Waifu2x-Caffe accepts a single input, which can be either one image or an entire folder of images. It supports the various file extensions shown in the image, but it does not support video files, even though a video is essentially a long sequence of images. Video2x, a third-party program that we'll talk about later, does support video files. If you really want to use Waifu2x-Caffe on a video, you'll first need to extract all of its frames into one folder. There are many ways to extract frames from a video, but the best way is with FFmpeg, a powerful open-source tool for handling and processing video, audio, and other multimedia files. It is an extremely useful tool to learn, though it can be quite difficult to pick up at first.
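As a rough sketch of the FFmpeg route, the Python below builds the command `ffmpeg -i <video> <folder>/frame_%06d.png`, which writes every frame as a numbered PNG so the whole folder can then be given to Waifu2x-Caffe as its input. The function names, the `frames` folder, and the `frame_%06d.png` naming pattern are my own choices for illustration, and running it assumes `ffmpeg` is on your PATH.

```python
import shlex
import subprocess
from pathlib import Path
from typing import List


def build_extract_frames_cmd(video: str, out_dir: str) -> List[str]:
    """Return an FFmpeg command that writes every frame of `video` as a numbered PNG.

    The %06d pattern yields frame_000001.png, frame_000002.png, ... so the
    frames sort in playback order inside the output folder.
    """
    pattern = Path(out_dir) / "frame_%06d.png"
    return ["ffmpeg", "-i", video, str(pattern)]


def extract_frames(video: str, out_dir: str) -> None:
    """Create the output folder (FFmpeg will not create it) and run the extraction."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_extract_frames_cmd(video, out_dir), check=True)


if __name__ == "__main__":
    # Hypothetical file names; this only prints the command instead of running it.
    print(shlex.join(build_extract_frames_cmd("input.mp4", "frames")))
```

PNG is used because it is lossless, so extracting the frames does not add another round of compression before Waifu2x-Caffe denoises them.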

Conversion Image Quality & Processing Settings

The conversion mode lets users choose whether to upscale the image, denoise it, or both. The most important setting here is the denoise level. Denoising should only be used when the image contains noise or compression artifacts. Level 0 is for images that have been compressed only once, level 1 for images that have been compressed a couple of times, and level 2 for images that have been compressed many times, such as memes that keep getting reposted until their quality is degraded and god-awful. Level 3 should only be used to get rid of compression artifacts. Denoising can smooth out lines or even erase small details, so it's important to choose the denoise level that best suits your needs. When in doubt, test each option and compare the results.
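Following the "test each option" advice, here is a minimal Python sketch that builds one Waifu2x-Caffe CUI command per denoise level so the four outputs can be compared side by side. It assumes the CUI executable is named `waifu2x-caffe-cui.exe` and accepts `-i`, `-o`, `-m noise`, and `-n <0|1|2|3>` as listed in the project's README; check those options against your own install. The `_noise<level>` output naming is hypothetical.

```python
import shlex
from pathlib import Path
from typing import List

# Assumed executable name; point this at your own waifu2x-caffe install.
CUI_EXE = "waifu2x-caffe-cui.exe"


def denoise_sweep(input_path: str) -> List[List[str]]:
    """Build one denoise-only CUI command per noise level (0 to 3)."""
    src = Path(input_path)
    commands = []
    for level in range(4):
        # Hypothetical naming scheme: meme.jpg -> meme_noise2.jpg
        out = src.with_name(f"{src.stem}_noise{level}{src.suffix}")
        commands.append([
            CUI_EXE,
            "-i", str(src),
            "-o", str(out),
            "-m", "noise",     # denoise only, no upscaling
            "-n", str(level),  # noise reduction level
        ])
    return commands


if __name__ == "__main__":
    for cmd in denoise_sweep("meme.jpg"):
        print(shlex.join(cmd))
```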

Model and TTA Mode

Waifu2x-Caffe offers a variety of models, and more information about each one can be found on its GitHub repository. Translated descriptions of the models:

- 2D illustration (RGB model): Model for 2D illustrations that converts all RGB channels of the image.

- Photo / animation (Photo model): Model for photos and animation.

- 2D illustration (UpRGB model): A model that converts faster than 2D illustration (RGB model) with the same or better image quality. However, it consumes more memory (VRAM) than the RGB model, so reduce the split size if the conversion is forcibly terminated partway through.

- Photo / animation (UpPhoto model): A model that converts faster than Photo / animation (Photo model) with the same or better image quality. However, it consumes more memory (VRAM) than the Photo model, so reduce the split size if the conversion is forcibly terminated partway through.

- 2D illustration (Y model): Model for 2D illustrations that converts only the brightness (luminance) of the image.

- 2D illustration (UpResNet10 model): A model that converts with higher image quality than 2D illustration (UpRGB model). Note that this model's output changes if the split size is changed.

- 2D illustration (CUnet model): The model that converts 2D illustrations with the highest image quality of all the included models. Note that this model's output changes if the split size is changed.
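If you use the CUI instead of the GUI, a model is selected by pointing `--model_dir` at its folder. The Python sketch below maps the GUI names above to the folder names I believe ship in Waifu2x-Caffe's `models` directory; treat those folder names as assumptions and compare them against your own `models` folder before relying on them.

```python
from typing import Dict, List

# Assumed folder names under waifu2x-caffe's "models" directory; verify locally.
MODEL_DIRS: Dict[str, str] = {
    "RGB": "models/anime_style_art_rgb",
    "Photo": "models/photo",
    "UpRGB": "models/upconv_7_anime_style_art_rgb",
    "UpPhoto": "models/upconv_7_photo",
    "Y": "models/anime_style_art",
    "UpResNet10": "models/upresnet10",
    "CUnet": "models/cunet",
}


def model_args(model: str) -> List[str]:
    """Return the CUI arguments that select `model` (a key of MODEL_DIRS)."""
    if model not in MODEL_DIRS:
        raise ValueError(f"unknown model {model!r}; choose from {sorted(MODEL_DIRS)}")
    return ["--model_dir", MODEL_DIRS[model]]
```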

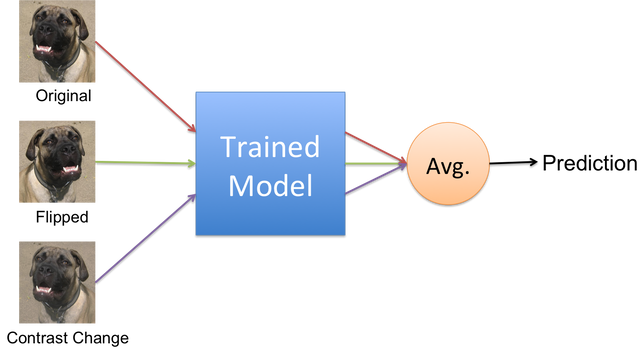

Test time augmentation (TTA) is a technique that runs a model on several transformed copies of an input (for example, flipped or rotated versions) and averages the predictions to get a more accurate result.

TTA mode in Waifu2x creates 8 flipped and rotated versions of the image, upscales each one, returns each result to the original orientation, and averages all 8 images to produce an even higher-quality upscaled image. According to Nagadomi, the creator of Waifu2x and a contributor to Waifu2x-Caffe, TTA mode can also reduce several kinds of artifacts. Note that TTA mode makes the entire upscaling process take about 8 times as long. In my personal experience, I have not been able to find any noticeable difference between an image upscaled in TTA mode and one upscaled without it.
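To make the 8x cost concrete, here is a minimal pure-Python sketch of the TTA idea described above: produce the 8 rotated/flipped versions of an image, upscale each, undo each transform, and average. The `upscale` argument stands in for the neural network; the nearest-neighbor `nearest_2x` function is a hypothetical placeholder, not Waifu2x's model, and images are plain nested lists of pixel values.

```python
from typing import Callable, List

Image = List[List[float]]


def rot90(img: Image) -> Image:
    """Rotate a 2-D grid 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def flip(img: Image) -> Image:
    """Mirror a 2-D grid left to right."""
    return [row[::-1] for row in img]


def transform(img: Image, k: int) -> Image:
    """Apply the k-th of the 8 variants: k % 4 rotations, then a flip if k >= 4."""
    for _ in range(k % 4):
        img = rot90(img)
    return flip(img) if k >= 4 else img


def inverse(img: Image, k: int) -> Image:
    """Undo transform(img, k): un-flip first, then rotate back to the start."""
    if k >= 4:
        img = flip(img)
    for _ in range((4 - k % 4) % 4):
        img = rot90(img)
    return img


def tta_upscale(img: Image, upscale: Callable[[Image], Image]) -> Image:
    """Upscale all 8 variants (8 model runs), restore orientation, and average."""
    outputs = [inverse(upscale(transform(img, k)), k) for k in range(8)]
    h, w = len(outputs[0]), len(outputs[0][0])
    return [[sum(o[i][j] for o in outputs) / 8 for j in range(w)] for i in range(h)]


def nearest_2x(img: Image) -> Image:
    """Placeholder 'model': nearest-neighbor 2x upscale."""
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


if __name__ == "__main__":
    image = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]]
    print(tta_upscale(image, nearest_2x))
```

The `upscale` call runs 8 times per image, which is where the extra processing time comes from. With this placeholder, which treats every orientation identically, averaging changes nothing; TTA only helps when the model's output differs between orientations, as a trained network's can.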

Other Settings

Split size and batch size control how much of an image Waifu2x-Caffe processes at once: the image is divided into blocks of the split size, and the batch size sets how many of those blocks are processed together. Generally, the bigger the split and batch sizes, the faster the upscaling process.

The problem with a bigger split and batch size is the amount of memory it uses. If the processor is set to CUDA, it's important to consider VRAM, as these settings can use a lot of it.
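A back-of-the-envelope Python sketch helps show how the two settings interact. It assumes a simplified model in which the image is cut into square blocks of `split_size` pixels and `batch_size` blocks go through the network per pass; the real implementation also pads each block, so treat the numbers as rough. The pixels-per-pass figure is only a proxy for memory use, which grows with both settings.

```python
import math


def count_blocks(width: int, height: int, split_size: int) -> int:
    """Number of split_size x split_size blocks needed to cover the image."""
    return math.ceil(width / split_size) * math.ceil(height / split_size)


def count_passes(width: int, height: int, split_size: int, batch_size: int) -> int:
    """Number of network passes when batch_size blocks are processed at once."""
    return math.ceil(count_blocks(width, height, split_size) / batch_size)


def pixels_per_pass(split_size: int, batch_size: int) -> int:
    """Pixels held in one batch: a rough proxy for how much (V)RAM a pass needs."""
    return batch_size * split_size ** 2


if __name__ == "__main__":
    for split, batch in [(64, 1), (128, 4), (256, 4)]:
        passes = count_passes(1920, 1080, split, batch)
        print(f"split={split} batch={batch} passes={passes} "
              f"pixels/pass={pixels_per_pass(split, batch)}")
```

Fewer, larger passes are generally faster on a GPU, but each pass holds proportionally more data in memory, which is the trade-off described above.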

Lastly, there are the app settings, the check cuDNN button, and the UI language setting. The UI language setting has 12 selectable languages. The app settings cover various miscellaneous options, the most important being the choice between the CPU and CUDA processors. The check cuDNN button lets users verify whether cuDNN can be used for CUDA processing.
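On the CUI side, the processor choice and the split, batch, and TTA settings are passed as flags. The Python sketch below assembles such a command; it assumes the `-p <cpu|gpu|cudnn>`, `-c` (split/crop size), `-b` (batch size), and `-t <0|1>` (TTA) options listed in Waifu2x-Caffe's README, and assumes that `gpu` and `cudnn` correspond to the GUI's CUDA processor. The executable name and default values are illustrative, not recommendations.

```python
import shlex
from typing import List

# Assumed executable name and processor values; verify against your install.
CUI_EXE = "waifu2x-caffe-cui.exe"
PROCESSORS = ("cpu", "gpu", "cudnn")


def build_cui_command(
    input_path: str,
    output_path: str,
    processor: str = "gpu",
    split_size: int = 128,
    batch_size: int = 1,
    tta: bool = False,
) -> List[str]:
    """Assemble a waifu2x-caffe CUI command from GUI-style settings."""
    if processor not in PROCESSORS:
        raise ValueError(f"processor must be one of {PROCESSORS}, got {processor!r}")
    return [
        CUI_EXE,
        "-i", input_path,
        "-o", output_path,
        "-p", processor,            # cpu, or a CUDA-based mode
        "-c", str(split_size),      # split (crop) size
        "-b", str(batch_size),      # blocks processed per batch
        "-t", "1" if tta else "0",  # TTA on/off
    ]


if __name__ == "__main__":
    print(shlex.join(build_cui_command("in.png", "out.png", processor="cpu")))
```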

Example outputs (images not preserved): noise_scale Level 1 at x1.0, with and without TTA; scale at x2.0, with and without TTA; noise at Levels 0, 1, 2, and 3; noise_scale at Levels 0, 1, 2, and 3 at x2.0.